Mohit Nalavadi

I'm a bioengineer turned programmer with a curiosity for scientific computing, computer vision, biotech, and music applications.

I have 6+ years of experience in scientific Python, ETL pipelines, and data infrastructure (cloud-native, on-premise, and hybrid). My experience comes from startups, taking prototypes to scalable production deployments complete with logging, monitoring, and end-to-end testing. I collaborate effectively with data scientists and DevOps teams to bridge the gap between engineering and data science.

On the side, I like experimenting with my own inventive projects, like a robotic microscope that generates gigapixel panoramic photos of tiny things, frequency-analysis software that turns audio files into piano visualizations, and a wearable device that creates music from human physiology.

Overall, I just love building things.

Current: Data Scientist @ commercetools GmbH, Berlin, DE

Education: B.Eng Biomolecular Engineering @ Santa Clara University, California

Main Projects

Aposynthese

Aposynthese is a tool to give anyone perfect pitch. It converts MP3 files into piano visualizations, so users can learn any song they like. It works by decomposing the song into its constituent frequencies with the Short-Time Fourier Transform (STFT) and mapping the dominant frequencies to real piano notes over time. The program also leverages some advanced Music Information Retrieval (MIR) techniques available through the librosa audio processing library. These include Harmonic-Percussive Source Separation (HPSS) to remove drum beats and iterative cosine similarity to remove vocals. Additional custom signal processing cleans up the STFT spectrograms and allows a smoother tonality mapping that mimics the human ear.
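
The frequency-to-note step can be sketched in a few lines of NumPy. This is a minimal illustration, not Aposynthese's actual code: it assumes a single mono frame, picks the strongest bin of a Hann-windowed FFT within the piano range, and rounds it to the nearest equal-tempered note using A4 = 440 Hz (MIDI note 69). The function names are hypothetical; librosa also offers `librosa.hz_to_midi` and `librosa.midi_to_note` for the same conversion.

```python
import numpy as np

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def freq_to_midi(freq_hz: float) -> int:
    """Nearest equal-tempered MIDI note number (A4 = 440 Hz = MIDI 69)."""
    return int(round(69 + 12 * np.log2(freq_hz / 440.0)))


def midi_to_name(midi: int) -> str:
    """MIDI number to scientific pitch name, e.g. 60 -> 'C4'."""
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def dominant_note(frame: np.ndarray, sr: int) -> str:
    """Name of the strongest piano-range frequency in one audio frame."""
    window = np.hanning(len(frame))  # taper the frame edges to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(frame * window))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sr)
    # Keep only bins inside the 88-key piano range, A0 (27.5 Hz) to C8 (~4186 Hz)
    in_range = (freqs >= 27.5) & (freqs <= 4186.0)
    peak_hz = freqs[in_range][np.argmax(spectrum[in_range])]
    return midi_to_name(freq_to_midi(peak_hz))


# Usage: a pure 440 Hz tone maps to A4
sr = 22050
t = np.arange(2048) / sr
print(dominant_note(np.sin(2 * np.pi * 440.0 * t), sr))  # A4
```

Applying this frame by frame across an STFT yields a note-over-time sequence. Note that 2048 samples at 22,050 Hz gives bins about 10.8 Hz wide, coarser than the semitone spacing in the lowest octaves, which is one reason extra spectrogram processing helps.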

Hyperscope

[Work in progress...] Hyperscope is a low-cost robotic microscope for generating hyper-resolution microscope images. It uses 3D printing, easy-to-find off-the-shelf components, and open-source software to reduce the cost of an automated microscope from $10k to less than $100. The hardware and software are designed to automatically scan a slide sample and take photos at predetermined steps. The images are stitched together with a custom algorithm written using OpenCV, and the final gigapixel panorama is sliced according to the GZI Protocol for rendering in a web browser (like Google Maps). The stepper motors can also be moved manually with a joystick, and photos can be taken with a remote shutter release.
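
The "predetermined steps" of a slide scan can be planned ahead of time. Below is a minimal sketch, assuming a rectangular sample area and camera field of view measured in millimeters and a fixed overlap fraction between neighboring frames; `serpentine_scan` and all its parameters are hypothetical, not Hyperscope's actual configuration.

```python
import math


def serpentine_scan(width_mm, height_mm, fov_w_mm, fov_h_mm, overlap=0.2):
    """Plan stage positions (x, y) in mm that tile a rectangular sample.

    Rows alternate direction (a "snake" path) so the stage never makes a long
    return move, and neighboring frames overlap so they can be stitched.
    """
    step_x = fov_w_mm * (1.0 - overlap)
    step_y = fov_h_mm * (1.0 - overlap)
    # Enough frames that the last one reaches the far edge of the sample
    n_cols = max(1, math.ceil((width_mm - fov_w_mm) / step_x) + 1)
    n_rows = max(1, math.ceil((height_mm - fov_h_mm) / step_y) + 1)
    positions = []
    for row in range(n_rows):
        cols = range(n_cols) if row % 2 == 0 else reversed(range(n_cols))
        for col in cols:
            positions.append((col * step_x, row * step_y))
    return positions


# Usage: a 10 x 6 mm area with a 2 x 2 mm field of view and no overlap
plan = serpentine_scan(10, 6, 2, 2, overlap=0.0)  # 15 positions in 3 rows
```

Multiplying each position by the stage's steps-per-millimeter (which depends on the motors and lead screws) would turn this plan into stepper-motor targets.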

Insula

Insula is the first device to create music from multiple biofeedback sensors. It was built for my bachelor's thesis with a team of three other engineers.

Our system creates live audio output by processing biodata from EEG, EMG, ECG, and breath rate sensors.

Physiological inputs (heartbeats, muscle contractions, etc.) are mapped to musical outputs (drum beats, chords) for the purposes of meditation, physiotherapy, and artistry. Insula paints a meaningful and holistic picture of bioinformation, enabling users to control and direct their body's natural rhythms into acoustic art.

I extended the original project with a "V2", which adds a glove with an IMU sensor that allows intentional control of the music.

Our tech stack includes Arduino, C++, Python, Processing (Java), and OpenBCI.
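
As an illustration of mapping a physiological signal to a musical event, a heartbeat in an ECG-like trace can be turned into a drum trigger with simple threshold detection. This sketch is hypothetical and not Insula's actual code: the threshold and the refractory period (which stops a single beat from firing twice) are assumed values, and a real ECG would need filtering first.

```python
import numpy as np


def detect_beats(signal, fs, threshold, refractory_s=0.25):
    """Return times (s) where the signal rises through `threshold`.

    Crossings closer than `refractory_s` to the previous accepted beat are
    ignored, so noise on a single heartbeat does not re-trigger the drum.
    """
    above = np.asarray(signal, dtype=float) >= threshold
    rising = np.flatnonzero(above[1:] & ~above[:-1]) + 1  # sample indices of upward crossings
    beats, last_t = [], -np.inf
    for idx in rising:
        t = idx / fs
        if t - last_t >= refractory_s:
            beats.append(t)
            last_t = t
    return beats


# Usage: synthetic pulses once per second, sampled at 100 Hz
fs = 100
ecg = np.zeros(5 * fs)
ecg[[50, 150, 250, 350, 450]] = 1.0
beats = detect_beats(ecg, fs, threshold=0.5)
bpm = 60.0 / np.mean(np.diff(beats))  # could drive the tempo of a drum loop
```

Each returned time could fire a drum sample directly, while the derived BPM suits slower musical parameters such as tempo.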

Image Processing and Computer Vision Projects

Focus Stack

Focusstack is a simple focus-stacking tool built in Python for creating fun images with my microscope. Focus stacking is a digital image processing technique that combines multiple images taken at different focus distances into a single image with a greater depth of field (DOF) than any of the individual source images. My technique is a basic implementation: it aligns the images in the stack using SIFT features, warps them to the same perspective, and uses the Laplacian to measure the amount of high-frequency detail (sharp intensity changes) in each image, which serves as a proxy for focus. The least in-focus regions are masked, and the stack is recombined into a single image.

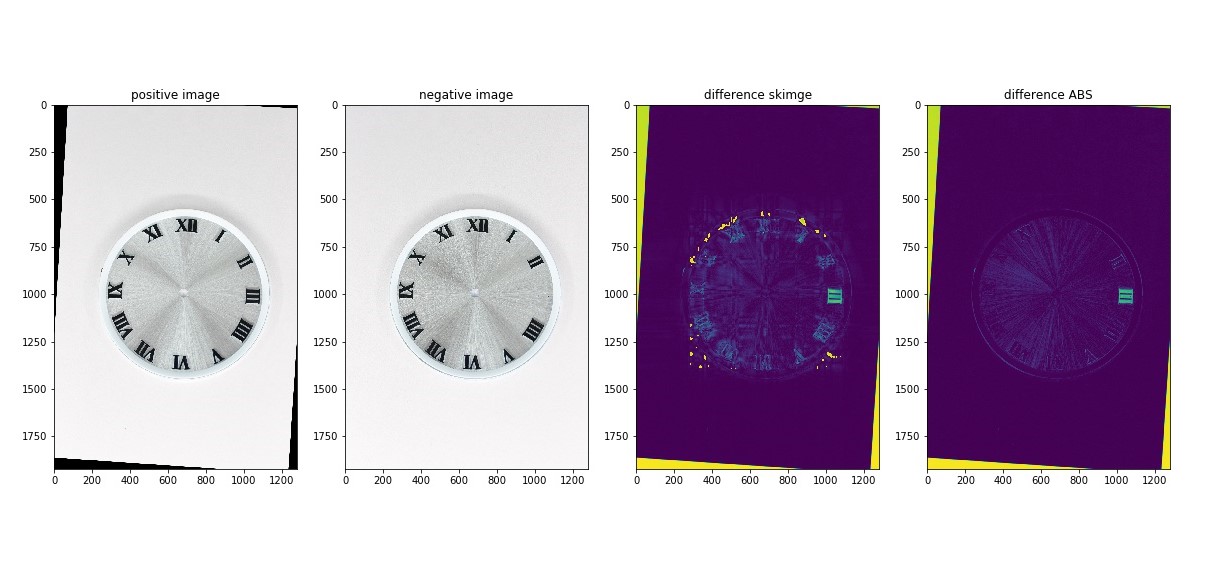
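
The focus-measure and recombination steps can be sketched with NumPy alone. This is a simplified illustration rather than Focusstack's actual code: it assumes the grayscale images are already aligned (skipping the SIFT and warping steps), computes the absolute Laplacian of each image, smooths it with a 3x3 box filter (an added assumption to reduce pixel noise), and keeps each pixel from whichever image is sharpest there.

```python
import numpy as np


def laplacian(img: np.ndarray) -> np.ndarray:
    """4-neighbor discrete Laplacian with edge padding."""
    p = np.pad(img, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * p[1:-1, 1:-1]


def box3(img: np.ndarray) -> np.ndarray:
    """3x3 mean filter with edge padding."""
    p = np.pad(img, 1, mode="edge")
    h, w = img.shape
    return sum(p[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)) / 9.0


def focus_stack(stack) -> np.ndarray:
    """Merge aligned grayscale images, keeping the sharpest source per pixel."""
    stack = np.asarray(stack, dtype=float)  # shape (n_images, height, width)
    sharpness = np.stack([box3(np.abs(laplacian(im))) for im in stack])
    best = np.argmax(sharpness, axis=0)  # index of the sharpest image at each pixel
    return np.take_along_axis(stack, best[None], axis=0)[0]
```

Picking the per-pixel maximum is equivalent to masking out every less-focused region. Real microscope stacks usually benefit from a wider (for example Gaussian) smoothing window, since a 3x3 neighborhood is sensitive to sensor noise.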

Watch Face Error Detection

This was a contract job to build a computer vision pipeline for detecting defects in watch faces on an assembly line. It combines OpenCV's SIFT detector, a custom statistical model based on Gaussian Kernel Density Estimation, and a homography transform for image alignment, followed by a simple comparison to detect errors.

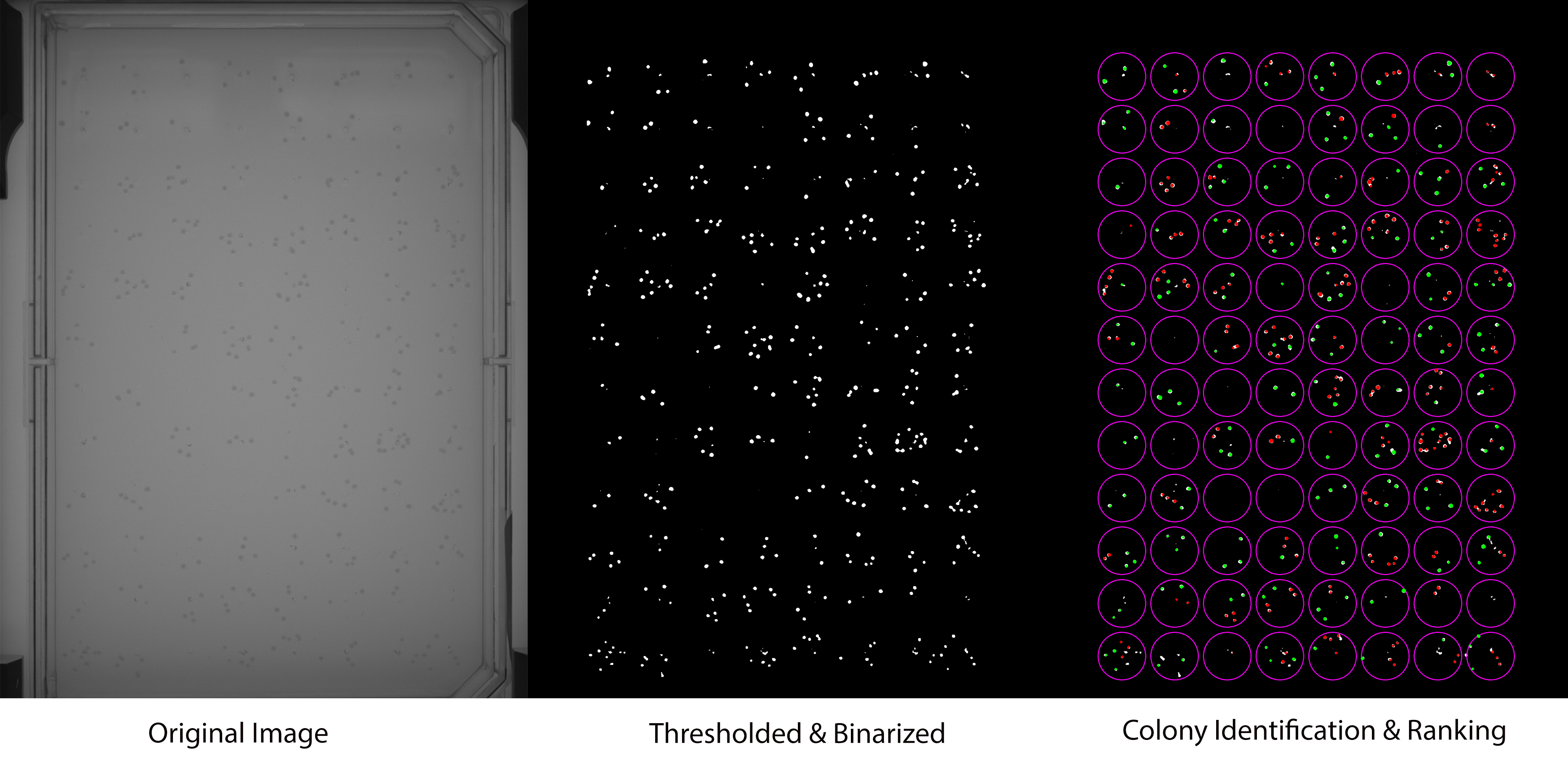
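
Once a test image has been aligned to a defect-free reference, the comparison step can be as small as a thresholded difference. The sketch below assumes both images are grayscale arrays scaled to [0, 1]; `find_defects`, the difference threshold, and the minimum defect size are hypothetical, and the statistical model from the real pipeline is not reproduced here.

```python
import numpy as np


def find_defects(aligned, reference, diff_threshold=0.2, min_pixels=5):
    """Compare an aligned image to a golden reference.

    Returns a boolean mask of pixels that differ by more than `diff_threshold`
    and a flag that is True when at least `min_pixels` pixels differ.
    """
    diff = np.abs(np.asarray(aligned, dtype=float) - np.asarray(reference, dtype=float))
    mask = diff > diff_threshold
    return mask, int(mask.sum()) >= min_pixels


# Usage: a 3x3 "scratch" on an otherwise identical face is flagged
reference = np.zeros((20, 20))
scratched = reference.copy()
scratched[5:8, 5:8] = 1.0
mask, is_defective = find_defects(scratched, reference)
```

The minimum pixel count keeps isolated pixels left over from imperfect alignment from being reported as defects.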

Robotic Vision for Cell Colony Counting

As an automation engineer intern at Amyris, a synthetic biology company, I improved the computer vision algorithms for an automated cell-colony-counting robot. The algorithm uses recursive Hough circle detection to find well plates and cells, plus basic image filtering and thresholding. It significantly improved both the quality and the speed of identification.
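
The idea behind Hough circle detection is that every edge pixel "votes" for all the points that could be the center of a circle passing through it, and true centers collect the most votes. Below is a minimal single-radius sketch in NumPy, assuming a binary edge map is already available (for example from filtering and thresholding). It is not the recursive variant used at Amyris; in practice OpenCV's `cv2.HoughCircles` searches a range of radii far more efficiently.

```python
import numpy as np


def hough_circle_votes(edges: np.ndarray, radius: int, n_angles: int = 90) -> np.ndarray:
    """Accumulator of center votes for circles of one known radius."""
    h, w = edges.shape
    acc = np.zeros((h, w), dtype=np.int32)
    thetas = np.linspace(0.0, 2 * np.pi, n_angles, endpoint=False)
    dy = np.round(radius * np.sin(thetas)).astype(int)
    dx = np.round(radius * np.cos(thetas)).astype(int)
    for y, x in zip(*np.nonzero(edges)):
        cy, cx = y - dy, x - dx  # candidate centers for this edge pixel
        ok = (cy >= 0) & (cy < h) & (cx >= 0) & (cx < w)
        np.add.at(acc, (cy[ok], cx[ok]), 1)  # unbuffered add counts repeated indices
    return acc


# Usage: draw a radius-10 ring centered at row 30, column 40, then recover the center
edges = np.zeros((80, 80), dtype=bool)
angles = np.linspace(0.0, 2 * np.pi, 360, endpoint=False)
edges[np.round(30 + 10 * np.sin(angles)).astype(int),
      np.round(40 + 10 * np.cos(angles)).astype(int)] = True
acc = hough_circle_votes(edges, radius=10)
center = np.unravel_index(np.argmax(acc), acc.shape)  # row 30, column 40
```

Counting colonies would then mean finding all accumulator peaks above a vote threshold, rather than only the single strongest one.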

Optical Heart-Rate Detection Via Webcam

This is a real-time computer vision application that measures heart rate from changes in optical intensity captured by a webcam. It uses Independent Component Analysis (ICA) to tease out the heart-rate frequency from the changing redness of the skin with each heartbeat. It implements the MIT paper "Non-contact, automated cardiac pulse measurements using video imaging and blind source separation" (Optics Express 18, 2010).
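
After ICA has separated the color traces into independent components, the heart rate can be read off as the dominant frequency of the pulse component. A minimal NumPy sketch of that last step, assuming a component sampled at the webcam frame rate and a plausible heart-rate band of 0.75 to 4 Hz (45 to 240 BPM); `estimate_bpm` is a hypothetical helper, and choosing which ICA component carries the pulse is not shown.

```python
import numpy as np


def estimate_bpm(component, fps, f_lo=0.75, f_hi=4.0):
    """Heart rate in beats per minute from the strongest in-band frequency."""
    x = np.asarray(component, dtype=float)
    x = (x - x.mean()) / (x.std() + 1e-12)  # remove the DC offset and normalize
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fps)
    band = (freqs >= f_lo) & (freqs <= f_hi)  # ignore slow drift and fast noise
    return 60.0 * freqs[band][np.argmax(power[band])]


# Usage: 10 s of a 1.2 Hz pulse at 30 fps plus slow lighting drift
fps = 30
t = np.arange(10 * fps) / fps
trace = np.sin(2 * np.pi * 1.2 * t) + 0.5 * t / t.max()
print(round(estimate_bpm(trace, fps)))  # 72
```

With a 10 s window the frequency resolution is 0.1 Hz (6 BPM), so longer windows or peak interpolation give finer estimates.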

Deep Learning Projects

I taught myself Machine Learning through textbooks, Andrej Karpathy's CS231n course, and Udacity courses. These are some projects I've worked on.

Deep Tesla

Developed a behavioral cloning algorithm to predict steering angle commands from dashcam images. I also tested the efficacy of Singular Value Decomposition (SVD) as a preprocessing step for removing stationary background objects in the frame (dashboard, reflections, etc.). Used MIT's Deep Tesla dataset.
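
The SVD preprocessing idea can be illustrated on a stack of grayscale frames: flatten each frame into a column, and the top singular component captures whatever stays constant across frames (such as the dashboard), so subtracting a low-rank reconstruction leaves mostly the changing scene. A minimal sketch, assuming equally sized frames and a rank-1 background; `remove_static_background` and its `rank` parameter are hypothetical, and the original preprocessing may have differed.

```python
import numpy as np


def remove_static_background(frames: np.ndarray, rank: int = 1) -> np.ndarray:
    """Subtract a low-rank (static) background from a stack of frames.

    frames: array of shape (n_frames, height, width).
    """
    n, h, w = frames.shape
    m = frames.reshape(n, h * w).T.astype(float)  # pixels x frames
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    background = (u[:, :rank] * s[:rank]) @ vt[:rank]  # best rank-k approximation
    return (m - background).T.reshape(n, h, w)


# Usage: a fixed gradient "dashboard" plus one bright pixel that moves each frame
static = np.linspace(1.0, 2.0, 64).reshape(8, 8)
frames = np.repeat(static[None], 8, axis=0)
for i in range(8):
    frames[i, 4, i] += 3.0
residual = remove_static_background(frames)  # the moving pixel dominates the residual
```

A small amount of the moving content leaks into the low-rank estimate, so the choice of rank trades background removal against preserving the road scene.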

U-Net for Semantic Segmentation

Developed a U-Net for semantic segmentation of cars on the road in dashcam images. Implemented the original architecture from the U-Net paper on arXiv. Trained several variants on a GPU, including runs for gradient checks, a control baseline, and augmentation/regularization.
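
One detail worth knowing when reimplementing the original U-Net is that its 3x3 convolutions are unpadded, so the output map is smaller than the input (a 572x572 input yields a 388x388 segmentation map in the paper), and skip connections must crop encoder features to match. The helper below is a hypothetical illustration that traces this size arithmetic for a four-level network.

```python
def unet_output_size(input_size: int, depth: int = 4) -> int:
    """Spatial size of the original (unpadded) U-Net output for a square input."""
    size = input_size
    for _ in range(depth):  # contracting path
        size -= 4  # two 3x3 convolutions without padding
        if size % 2:
            raise ValueError("max-pooling would see an odd size; choose another input size")
        size //= 2  # 2x2 max-pool with stride 2
    size -= 4  # two convolutions at the bottleneck
    for _ in range(depth):  # expanding path
        size = size * 2 - 4  # 2x2 up-convolution, then two 3x3 convolutions
    return size


print(unet_output_size(572))  # 388
```

The error check reflects the paper's advice to pick an input size for which every max-pooling step sees an even size.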

Other Projects

Data Science and Programming Tutorials

As a data science educator at General Assembly, I wrote several tutorials for my students explaining arcane data science topics that were not covered in class. I also wrote tutorials on Cython, Deep Learning Best Practices, Python Tricks, Computer Vision, C++, and more.

Covid-19 Data Dashboard with Dash

I built an interactive web dashboard for visualizing open-source Covid-19 data. The tool uses Python's Dash library, which is built on top of Plotly.js.

The user can toggle and select between several views of the data.

Quantitative Finance Experiments

I built a novel algorithm using adaptive Bollinger Bands (z-scores) to predict stock prices, and backtested it across 900 tickers and 12+ years of stock data from Quandl. I also built an interactive visualization app with Python's Dash library.
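
Standard Bollinger Bands sit two standard deviations above and below a rolling mean, so a price's position relative to the bands is just a rolling z-score. Below is a minimal sketch with a fixed window. The adaptive part of the original algorithm is not reproduced here, and `rolling_zscore` and its conventional 20-period default are assumptions.

```python
import numpy as np


def rolling_zscore(prices, window=20):
    """z-score of each price against the trailing `window` prices (itself included).

    |z| > 2 corresponds to closing outside standard 2-sigma Bollinger Bands.
    The first `window - 1` values are NaN because the window is incomplete.
    """
    prices = np.asarray(prices, dtype=float)
    z = np.full(prices.shape, np.nan)
    for i in range(window - 1, len(prices)):
        w = prices[i - window + 1 : i + 1]
        std = w.std()  # population standard deviation, a common choice for the bands
        if std > 0:
            z[i] = (prices[i] - w.mean()) / std
    return z


# Usage: a short steadily rising series with a 3-period window
z = rolling_zscore([1, 2, 3, 4, 5], window=3)
```

A mean-reversion rule might, for example, treat z < -2 as oversold and z > 2 as overbought, though any such thresholds would need to be validated by backtesting.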